Understanding the Impact of Batch Normalization on AI Model Training

Stabilizing Training with Batch Normalization in AI Models

Batch normalization is a widely used technique for stabilizing the training of deep neural networks. As businesses in Saudi Arabia and the UAE increasingly turn to Artificial Intelligence (AI) to drive innovation and efficiency, batch normalization offers a reliable way to help their AI models train consistently and perform accurately. The technique was originally introduced to address internal covariate shift: the change in the distribution of each layer's inputs during training, as the parameters of earlier layers are updated. This shift was argued to slow convergence and cause instability.

In deep learning, models can consist of hundreds or even thousands of layers, so maintaining stable and efficient training is paramount. Batch normalization first normalizes each feature of a layer's inputs to zero mean and unit variance, using the mean and variance computed over the current mini-batch. It then applies a learned scale and shift, so the network can still produce whatever distribution suits the next layer. Because these statistics are computed per mini-batch during training, the technique is called “batch normalization.” As a result, the model becomes less sensitive to weight initialization and to the choice of learning rate, and the technique helps mitigate vanishing or exploding gradients, which are common issues in deep networks.
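For reference, the standard batch normalization transform for a single feature over a mini-batch of m values x_1, …, x_m is shown below. Here ε is a small constant added for numerical stability, and γ (scale) and β (shift) are the learned per-feature parameters mentioned above.

```latex
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_B\right)^2

\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma\,\hat{x}_i + \beta
```

The zero-mean, unit-variance property applies to \hat{x}_i. The layer's final output y_i can take on any mean and scale the network learns through γ and β.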

For business executives and decision-makers in Riyadh, Dubai, and other leading business hubs in the Middle East, the application of batch normalization is not just a technical detail but a strategic advantage. Ensuring that AI models are trained efficiently and effectively allows companies to deploy AI solutions with confidence, knowing that their models will perform reliably in real-world scenarios. This is particularly critical in sectors like finance, healthcare, and logistics, where the accuracy and stability of AI-driven decisions can have significant operational and financial implications.

The Benefits of Batch Normalization in AI Models

The use of batch normalization in AI models offers several key benefits that enhance the overall performance and reliability of deep learning systems. One of the most significant advantages is the acceleration of the training process. By normalizing the inputs of each layer, batch normalization allows for higher learning rates, which in turn speeds up the convergence of the model. This is particularly important in time-sensitive applications where quick deployment of AI solutions is critical to maintaining a competitive edge.

Another crucial benefit of batch normalization is that it acts as a mild regularizer, which can reduce the need for other forms of regularization such as dropout. Regularization refers to techniques that prevent overfitting, where a model performs well on training data but poorly on unseen data. During training, each example is normalized with statistics computed from the other examples in its mini-batch. This adds a slight noise to each layer's activations, which helps the model generalize better to new data. This is especially valuable for businesses in Saudi Arabia and the UAE, where AI models are often used to make predictions or decisions in dynamic and evolving environments.
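The source of this noise can be shown with a small sketch: the same activation value is normalized differently depending on which other examples share its mini-batch. The batch values below are made up for illustration. The helper function `batch_norm` is hypothetical, and it omits the learned scale and shift for brevity.

```python
def batch_norm(batch, eps=1e-5):
    """Normalize one feature's activations using the mini-batch mean and variance."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [(x - mean) / (var + eps) ** 0.5 for x in batch]

# The same activation value, 2.0, placed in two different hypothetical mini-batches.
batch_a = [2.0, 1.0, 3.0, 0.0]
batch_b = [2.0, 4.0, 5.0, 6.0]

out_a = batch_norm(batch_a)[0]  # 2.0 is above batch_a's mean, so the result is positive
out_b = batch_norm(batch_b)[0]  # 2.0 is below batch_b's mean, so the result is negative
print(f"{out_a:.3f} vs {out_b:.3f}")  # prints 0.447 vs -1.521
```

During training, this batch-dependent variation acts like a small random perturbation of the activations. Frameworks switch it off at inference time, as discussed later in this article.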

Moreover, batch normalization simplifies the tuning of hyperparameters such as the learning rate. Without batch normalization, selecting the right learning rate can be a delicate balancing act: too high a rate can cause training to diverge, while too low a rate results in slow convergence. Because batch normalization stabilizes the learning process, a wider range of learning rates works well, which makes training more robust and less prone to divergence.
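One property often cited to explain this robustness is that batch normalization makes a layer's normalized output nearly invariant to the scale of the weights feeding it. Multiplying those weights by a constant changes the values before normalization but not after it, apart from the small effect of ε. The pure-Python sketch below checks this. The inputs, weights, and helper functions are hypothetical.

```python
def batch_norm(batch, eps=1e-5):
    """Normalize one feature's activations using the mini-batch mean and variance."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [(x - mean) / (var + eps) ** 0.5 for x in batch]

def linear(inputs, weights):
    """Single-output linear unit: dot product of each input row with the weights."""
    return [sum(w * x for w, x in zip(weights, row)) for row in inputs]

inputs = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0], [-2.0, 1.5]]  # hypothetical mini-batch
weights = [0.3, -0.7]
scaled = [10.0 * w for w in weights]  # same direction, 10x larger weights

out = batch_norm(linear(inputs, weights))
out_scaled = batch_norm(linear(inputs, scaled))

# The two outputs differ only by the tiny influence of eps.
max_diff = max(abs(a - b) for a, b in zip(out, out_scaled))
print(f"max difference: {max_diff:.2e}")
```

Because the normalized activations do not grow when the weights grow, a single overly large update is less likely to push the next layer's inputs into extreme ranges. This is one reason training can tolerate larger learning rates.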

Applying Batch Normalization in Business AI Solutions

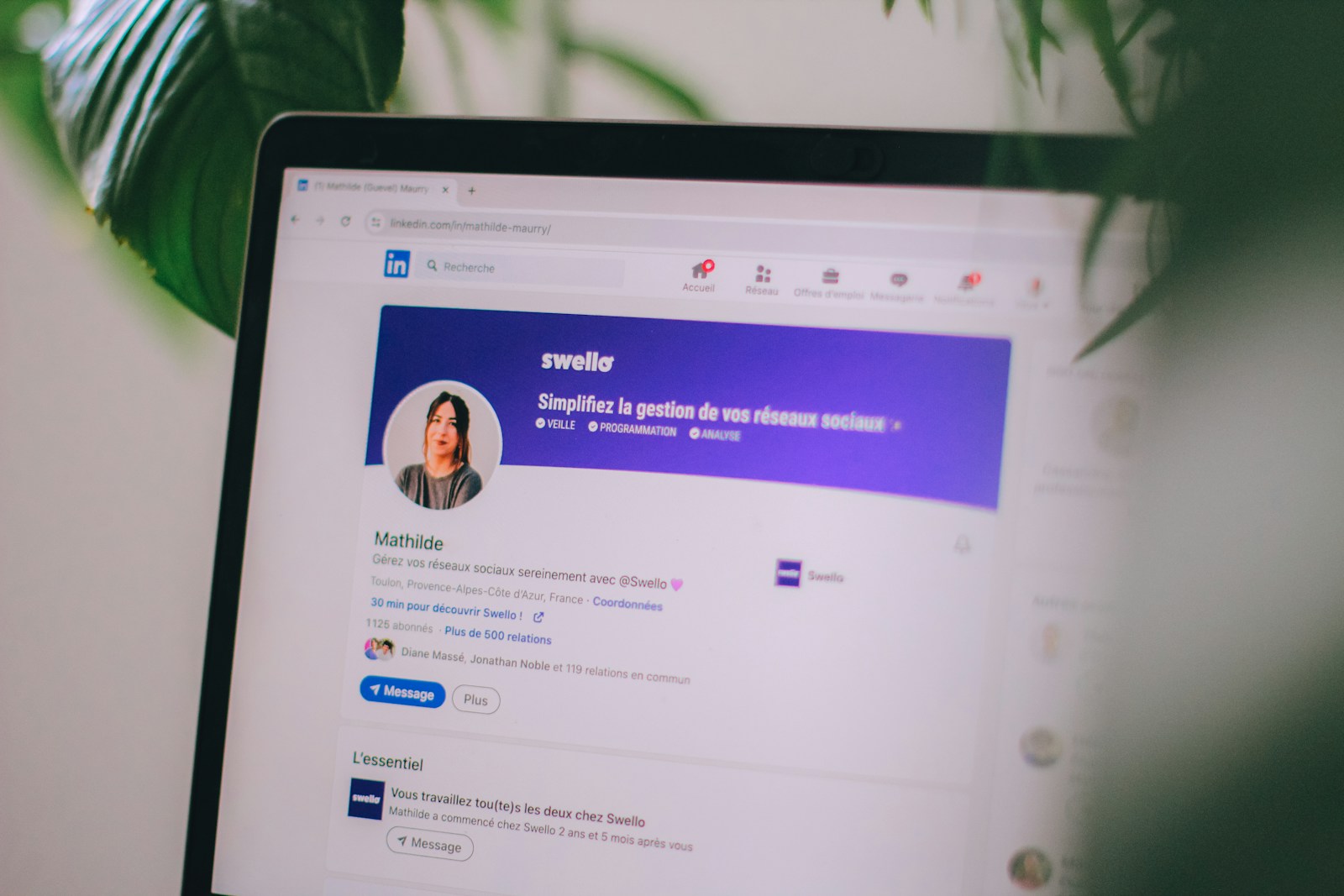

For companies looking to implement AI-driven solutions in Saudi Arabia, the UAE, Riyadh, Dubai, and beyond, the strategic application of batch normalization can significantly enhance the performance of their AI models. One common practice is to add batch normalization after most convolutional or fully connected layers, typically between the linear transform and the activation function, and usually not after the final output layer. This is especially useful in deep networks, where the risk of vanishing or exploding gradients is higher. When the hidden layers' inputs are kept normalized, businesses can maintain the stability of their models throughout training.
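The arrangement described above can be sketched as a single hidden block: linear transform, then batch normalization, then a ReLU activation. The learned shift β plays the role of a bias, and subtracting the batch mean would cancel any constant bias anyway, so the preceding linear layer is commonly built without one. All names and values below are hypothetical. A real project would use the batch normalization layer provided by its deep learning framework.

```python
def linear_no_bias(inputs, weights):
    """Dense layer without bias: each output unit is a dot product with one weight row."""
    return [[sum(w * x for w, x in zip(w_row, row)) for w_row in weights] for row in inputs]

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Normalize each feature (column) over the mini-batch, then apply learned scale/shift."""
    n, features = len(batch), len(batch[0])
    out = [[0.0] * features for _ in range(n)]
    for j in range(features):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / n
        for i, x in enumerate(col):
            out[i][j] = gamma[j] * (x - mean) / (var + eps) ** 0.5 + beta[j]
    return out

def relu(batch):
    """Element-wise ReLU activation."""
    return [[max(0.0, x) for x in row] for row in batch]

def hidden_layer(inputs, weights, gamma, beta):
    """Hidden block: linear transform -> batch normalization -> ReLU."""
    return relu(batch_norm(linear_no_bias(inputs, weights), gamma, beta))

inputs = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0], [-2.0, 1.5]]  # hypothetical mini-batch of 4
weights = [[0.3, -0.7], [0.8, 0.1], [-0.5, 0.4]]              # 3 hidden units, 2 inputs each
gamma, beta = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]                # common initial values

print(hidden_layer(inputs, weights, gamma, beta))
```

Stacking several such blocks, and leaving the final output layer un-normalized, gives the layout described in the paragraph above.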

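Another important consideration is that batch normalization behaves differently during training and inference. During training, each layer is normalized with the statistics of the current mini-batch. During inference (the model's deployment phase), it instead uses running estimates of the mean and variance accumulated during training, so a prediction does not depend on which other inputs happen to be processed alongside it. Switching the model into inference mode before deployment (in PyTorch, for example, by calling model.eval()) is therefore essential. This matters most in real-time AI applications, such as predictive maintenance in manufacturing or personalized marketing in e-commerce, where consistent and reliable model outputs are crucial for success.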
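The difference between the two modes can be illustrated with a simplified single-feature layer that keeps exponential moving averages of the batch statistics. The class name, momentum value, and training data below are assumptions for illustration. Real frameworks differ in details such as the momentum convention and whether the running variance is bias-corrected.

```python
class BatchNorm1D:
    """Single-feature batch normalization with running statistics (illustrative sketch)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.gamma, self.beta = 1.0, 0.0  # learned in a real model; fixed here
        self.running_mean, self.running_var = 0.0, 1.0
        self.training = True

    def __call__(self, batch):
        if self.training:
            # Training: normalize with the current mini-batch's statistics...
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            # ...and update exponential moving averages for later use at inference.
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        else:
            # Inference: use the stored running estimates, so each output depends
            # only on its own input, not on the rest of the batch.
            mean, var = self.running_mean, self.running_var
        return [self.gamma * (x - mean) / (var + self.eps) ** 0.5 + self.beta for x in batch]


bn = BatchNorm1D()
train_batches = [[4.0, 6.0, 5.0, 3.0], [5.0, 7.0, 4.0, 6.0]] * 50  # hypothetical data
for batch in train_batches:
    bn(batch)

bn.training = False  # analogous to switching a framework model into inference mode
alone = bn([5.0])[0]
with_others = bn([5.0, 100.0, -100.0])[0]
print(alone == with_others)  # True: inference output is independent of batch companions
```

If the layer were left in training mode, the prediction for the same input of 5.0 would change with the other inputs in the batch. A batch of one would also collapse to the shift β, because the batch mean would equal the input itself.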

Finally, businesses should consider the broader impact of batch normalization on their AI strategy. As AI continues to evolve and integrate into various aspects of business operations, techniques like batch normalization will play an increasingly important role in ensuring that AI models are not only powerful but also practical and reliable. This is especially true in the competitive markets of the Middle East, where companies that leverage advanced AI techniques can differentiate themselves and achieve long-term business success.

#BatchNormalization #DeepLearning #AIModelStability #BusinessAI #AIinSaudiArabia #AIinUAE #AdvancedAITechniques