The Importance of Addressing AI Bias in Modern Business

Understanding the Roots of Bias in AI Systems

AI bias can manifest in various ways, from hiring algorithms that inadvertently favor certain demographics to customer service bots that misinterpret inquiries because of linguistic or cultural nuances. The implications of unchecked AI bias are far-reaching: legal challenges, loss of customer trust, and reputational damage. In regions like Saudi Arabia and the UAE, where technological innovation is tightly linked to economic growth and national development goals, ensuring that AI systems are fair and unbiased is crucial for sustainable business success.

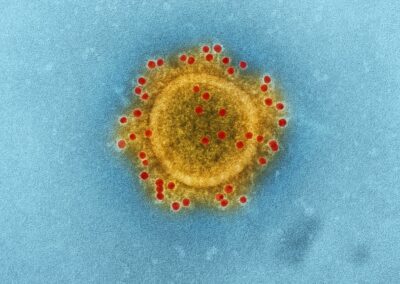

The rise of AI has brought attention to significant ethical concerns, particularly bias in AI model outputs. Bias in AI occurs when algorithms produce results that unfairly favor or disadvantage certain groups. This can happen because the training data reflects existing societal biases, or because of flaws in the algorithm design. For businesses operating in Riyadh, Dubai, and other major cities, addressing AI bias is not just an ethical imperative but also a business necessity.

To mitigate these risks, it is essential to develop robust strategies for detecting and correcting AI bias. By implementing proactive measures, businesses can ensure that their AI-driven processes contribute to equitable outcomes and align with broader societal values. This approach not only protects against the negative consequences of biased AI but also enhances the organization’s reputation as a leader in ethical technology adoption.

Techniques for Detecting AI Bias

Detecting bias in AI models is the first step toward ensuring that these systems produce fair and accurate results. One of the most effective techniques for bias detection is the use of fairness metrics. These metrics help evaluate how different groups are treated by the AI model, allowing developers to identify any disparities in the model’s predictions. For instance, metrics such as demographic parity, equalized odds, and disparate impact can reveal whether the AI model is disproportionately benefiting or disadvantaging certain groups based on race, gender, or other characteristics.
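
The metrics named above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming binary predictions (0 or 1) and a single group label per row; the function names and the toy data are hypothetical and do not come from any particular fairness library.

```python
from collections import defaultdict

def selection_rates(y_pred, groups):
    """Share of positive predictions (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(y_pred, groups):
    """Lowest selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(y_pred, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else float("nan")

def _rate_given_label(y_true, y_pred, groups, label):
    """Per group: share of rows with y_true == label that were predicted 1.
    Groups with no rows for that label are left out."""
    totals, positives = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == label:
            totals[group] += 1
            positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def equalized_odds_gaps(y_true, y_pred, groups):
    """Return (true-positive-rate gap, false-positive-rate gap) across groups."""
    tpr = _rate_given_label(y_true, y_pred, groups, 1)
    fpr = _rate_given_label(y_true, y_pred, groups, 0)
    return (max(tpr.values()) - min(tpr.values()),
            max(fpr.values()) - min(fpr.values()))

# Toy data (hypothetical): two groups of four applicants each.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

dp_diff = demographic_parity_difference(y_pred, groups)   # 0.5
di_ratio = disparate_impact_ratio(y_pred, groups)          # about 0.33
tpr_gap, fpr_gap = equalized_odds_gaps(y_true, y_pred, groups)  # (0.5, 0.5)
```

In this toy example group "a" is selected three times as often as group "b", and the gaps in true-positive and false-positive rates show that the disparity is not explained by the true labels alone.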

In addition to fairness metrics, another critical technique for detecting bias is the application of model interpretability tools. These tools provide insights into how AI models make decisions, helping developers understand the underlying factors that influence the model’s predictions. By analyzing these factors, developers can identify potential sources of bias and take corrective actions. For businesses in Saudi Arabia and the UAE, where AI is increasingly used in sectors such as finance, healthcare, and government services, ensuring that AI models are transparent and interpretable is key to maintaining public trust and regulatory compliance.
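
One simple interpretability technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is an illustration under stated assumptions, not a substitute for a dedicated interpretability tool. The model, the feature names (`years_experience`, `postcode_flag`), and the data are all hypothetical, and the labels are set equal to the model's own decisions so that baseline accuracy is 1.0.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's decision matches the label."""
    hits = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return hits / len(rows)

def permutation_importance(model, rows, labels, feature_names,
                           n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = {}
    for j, name in enumerate(feature_names):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [row[:j] + [value] + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances[name] = sum(drops) / n_repeats
    return importances

def toy_model(row):
    """Hypothetical model that approves purely on a location flag,
    a feature that could act as a proxy for group membership."""
    years_experience, postcode_flag = row
    return 1 if postcode_flag == 1 else 0

rows = [[5, 1], [3, 1], [8, 1], [2, 1], [6, 0], [4, 0], [9, 0], [1, 0]]
labels = [toy_model(row) for row in rows]
feature_names = ["years_experience", "postcode_flag"]

importance = permutation_importance(toy_model, rows, labels, feature_names)
```

Here `years_experience` scores exactly zero because the toy model never reads it, while `postcode_flag` carries all of the importance. A result like that on a real model would be a prompt to check whether the dominant feature is standing in for a protected characteristic.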

Data Preprocessing and Bias Mitigation

Once bias has been detected in an AI model, the next step is to implement strategies to correct it. One of the most effective methods for bias correction is data preprocessing, which involves adjusting the training data to reduce or eliminate biases before the AI model is trained. This can include techniques such as reweighting, resampling, or modifying the data to ensure that all groups are fairly represented. For example, in a hiring algorithm, ensuring that the training data includes a diverse range of candidates from different backgrounds can help prevent the model from favoring any particular demographic.
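
As an illustration of reweighting, the sketch below assigns each training row a weight equal to the frequency its (group, label) pair would have if group and label were independent, divided by the frequency actually observed. This is one common formulation rather than the only one; the function names and toy data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight per row: expected share of (group, label) under independence
    divided by the observed share of that pair."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    weights = []
    for group, label in zip(groups, labels):
        expected = (group_counts[group] / n) * (label_counts[label] / n)
        observed = pair_counts[(group, label)] / n
        weights.append(expected / observed)
    return weights

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights)
                   if g == group and y == 1)
    return positive / total

# Toy hiring history (hypothetical): group "a" was hired far more often.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
```

The raw positive rates are 0.75 for group "a" and 0.25 for group "b"; after weighting, both come out at 0.5. The weights would then be passed to a training routine that accepts per-sample weights.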

Another approach to bias correction is the use of algorithmic fairness constraints during the model training process. These constraints are designed to ensure that the model’s predictions adhere to predefined fairness criteria, such as equal opportunity or demographic parity. By incorporating these constraints, developers can guide the model to produce fairer outcomes while maintaining overall accuracy. In regions like Saudi Arabia and the UAE, where AI is playing an increasingly important role in decision-making processes, applying fairness constraints can help align AI systems with societal values and legal requirements.
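
One way to approximate a fairness constraint is to add a penalty to the training loss. The sketch below trains a small logistic regression by gradient descent and penalizes the squared gap in mean predicted probability between two groups, which is a soft version of demographic parity rather than a hard constraint. Everything here is an assumption made for illustration: the function names, the penalty strength `lam`, the learning rate, and the toy data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, row):
    """Predicted probability; the last weight is the intercept."""
    z = weights[-1] + sum(w * x for w, x in zip(weights, row))
    return sigmoid(z)

def parity_gap(weights, X, groups, group_a, group_b):
    """Mean predicted probability for group_a minus that for group_b."""
    def mean_for(target):
        scores = [predict(weights, row)
                  for row, g in zip(X, groups) if g == target]
        return sum(scores) / len(scores)
    return mean_for(group_a) - mean_for(group_b)

def train(X, y, groups, group_a, group_b, lam=0.0, lr=0.1, steps=2000):
    """Minimize mean log-loss + lam * parity_gap**2 by gradient descent."""
    n = len(X)
    n_a = sum(1 for g in groups if g == group_a)
    n_b = sum(1 for g in groups if g == group_b)
    weights = [0.0] * (len(X[0]) + 1)
    for _ in range(steps):
        preds = [predict(weights, row) for row in X]
        gap = (sum(p for p, g in zip(preds, groups) if g == group_a) / n_a
               - sum(p for p, g in zip(preds, groups) if g == group_b) / n_b)
        grad = [0.0] * len(weights)
        for row, target, p, g in zip(X, y, preds, groups):
            inputs = list(row) + [1.0]  # constant input for the intercept
            loss_term = (p - target) / n  # log-loss gradient for this row
            # This row's contribution to the gradient of the gap.
            if g == group_a:
                sign = 1.0 / n_a
            elif g == group_b:
                sign = -1.0 / n_b
            else:
                sign = 0.0
            penalty_term = 2.0 * lam * gap * sign * p * (1.0 - p)
            for j, x in enumerate(inputs):
                grad[j] += (loss_term + penalty_term) * x
        weights = [w - lr * gj for w, gj in zip(weights, grad)]
    return weights

# Toy data (hypothetical): one feature that is strongly tied to group.
X = [[2.0], [2.0], [1.0], [1.0], [-1.0], [-1.0], [-2.0], [-2.0]]
y = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

plain = train(X, y, groups, "a", "b", lam=0.0)
fair = train(X, y, groups, "a", "b", lam=5.0)
gap_plain = abs(parity_gap(plain, X, groups, "a", "b"))
gap_fair = abs(parity_gap(fair, X, groups, "a", "b"))
```

With the penalty switched on, the gap between the two groups shrinks because the model leans less on the group-correlated feature. The trade-off is some loss of fit to the historical labels, which is why the penalty strength needs to be chosen deliberately.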
