Understanding the Importance of Tokenization in IoT Data Processing

What is Tokenization and How Does It Protect IoT Data?

Implementing tokenization in IoT data processing is a critical strategy for protecting sensitive information in an increasingly connected world. Tokenization involves replacing sensitive data, such as personal identifiers or financial details, with non-sensitive equivalents known as tokens. These tokens are meaningless on their own and can only be mapped back to the original data through a secure tokenization system. As a result, an attacker who gains unauthorized access to tokenized data learns nothing sensitive, provided the tokenization system itself remains secure.
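
To make the mapping concrete, here is a minimal sketch of a token vault in Python. It assumes an in-memory store and a hypothetical `TokenVault` class with a `tok_` prefix chosen purely for illustration; a production tokenization system would add persistence, access controls, and auditing.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault that maps random tokens to original values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it carries no information about the value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only a lookup inside the vault can recover the original value.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("patient-id-12345")
original = vault.detokenize(token)
```

Because the token comes from a random generator rather than from the data, anyone holding only the token has nothing to reverse; recovery depends entirely on access to the vault.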

In countries like Saudi Arabia and the UAE, where IoT adoption is accelerating across various sectors, the security of data is paramount. Cities such as Riyadh and Dubai, which are leading the charge in smart city initiatives, must prioritize the protection of sensitive information as they expand their IoT infrastructures. By using tokenization, organizations can significantly reduce the impact of data breaches: even if an IoT system is compromised, the tokens it exposes are of little value to cybercriminals.

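Tokenization differs from encryption in that, in the common vault-based approach, a token is not mathematically derived from the original data. The link between the two lives in a lookup held by the tokenization system rather than in a key, so there is nothing in the token itself to decrypt. Systems that handle only tokens do not need to perform cryptographic operations on that data or manage keys for it, which makes tokenization particularly well-suited for IoT environments, where processing power and resources may be limited. By understanding the benefits and mechanisms of tokenization, businesses can implement this technology to safeguard their IoT data effectively.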

Challenges of Securing IoT Data Without Tokenization

Without tokenization, IoT data processing is susceptible to various security risks, particularly when handling sensitive information. Traditional encryption methods, while effective, can be resource-intensive and may not be practical for all IoT devices, especially those with limited computational capabilities. Additionally, encrypted data, if compromised, can still be a target for decryption efforts, potentially exposing sensitive information.

In Saudi Arabia and the UAE, where IoT networks are integral to industries such as healthcare, finance, and public services, the security of data is a top priority. Cities like Riyadh and Dubai rely on IoT systems to manage critical infrastructure, making the protection of this data essential for national security and public trust. However, the lack of effective data protection measures, such as tokenization, can lead to significant vulnerabilities.

One of the primary challenges is ensuring that data remains secure throughout its lifecycle, from collection and processing to storage and transmission. IoT devices often operate in distributed environments, making it difficult to maintain consistent security protocols. Without tokenization, sensitive data can be exposed during these processes, increasing the risk of unauthorized access and data breaches. By adopting tokenization, organizations can mitigate these risks, ensuring that sensitive information is protected at all stages of data processing.

Implementing Tokenization in IoT Networks

To effectively implement tokenization in IoT data processing, organizations should follow best practices that ensure the security and efficiency of their tokenization systems. One key practice is to use a centralized tokenization server, which manages the generation and mapping of tokens. This server should be highly secure, with strong access controls and encryption to protect the tokenization process itself from unauthorized access.
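
The access-control side of a centralized server can be sketched as follows. This is an in-process illustration rather than a networked service, and the `TokenizationService` class, the client names, and the permission model are all assumptions made for the example. It shows one possible policy: any registered client may tokenize, but only explicitly authorized clients may map tokens back. Encryption of the stored values and of traffic to the server is out of scope here.

```python
import hmac
import secrets


class TokenizationService:
    """Hypothetical central service with per-client detokenization rights."""

    def __init__(self):
        self._vault = {}    # token -> original value
        self._clients = {}  # client_id -> (api_key, can_detokenize)

    def register_client(self, client_id: str, can_detokenize: bool = False) -> str:
        api_key = secrets.token_urlsafe(32)
        self._clients[client_id] = (api_key, can_detokenize)
        return api_key

    def _authenticate(self, client_id: str, api_key: str) -> bool:
        record = self._clients.get(client_id)
        if record is None:
            raise PermissionError("unknown client")
        expected_key, can_detokenize = record
        # Constant-time comparison avoids leaking key contents through timing.
        if not hmac.compare_digest(expected_key.encode(), api_key.encode()):
            raise PermissionError("bad credentials")
        return can_detokenize

    def tokenize(self, client_id: str, api_key: str, value: str) -> str:
        self._authenticate(client_id, api_key)
        token = "tok_" + secrets.token_hex(16)
        self._vault[token] = value
        return token

    def detokenize(self, client_id: str, api_key: str, token: str) -> str:
        if not self._authenticate(client_id, api_key):
            raise PermissionError("client may not detokenize")
        return self._vault[token]


service = TokenizationService()
sensor_key = service.register_client("sensor-01")
billing_key = service.register_client("billing-backend", can_detokenize=True)
token = service.tokenize("sensor-01", sensor_key, "meter-owner-778")
```

With this split, a compromised sensor credential can create new tokens but cannot recover any original value.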

In cities like Riyadh and Dubai, where IoT networks are expansive and complex, a centralized tokenization server can provide a scalable solution for managing sensitive data across multiple devices and systems. By centralizing the tokenization process, organizations can streamline data protection efforts, ensuring that all sensitive information is consistently tokenized and secure.

Another best practice is to implement tokenization at the earliest possible stage in the data processing workflow. By tokenizing data at the point of collection, organizations can prevent sensitive information from being exposed during subsequent processing or transmission. This approach is particularly important in IoT environments, where data is often transmitted across networks and stored in various locations: once the original values are replaced at the source, every later hop and storage location sees only tokens.

Best Practices for Tokenization Implementation in IoT Networks

Ensuring Compliance with Regulatory Requirements

One of the critical considerations when implementing tokenization in IoT data processing is ensuring compliance with relevant regulatory requirements. Many regions, including Saudi Arabia and the UAE, have strict data protection laws that govern how sensitive information must be handled and secured. By adopting tokenization, organizations can meet these regulatory requirements while also enhancing the security of their IoT networks.

In tech-forward cities like Riyadh and Dubai, where regulatory compliance is crucial for maintaining public trust and avoiding legal penalties, tokenization offers a practical solution for securing sensitive data. By tokenizing personal and financial information, organizations can support compliance with the personal data protection laws of Saudi Arabia and the UAE, and with regulations such as the EU's GDPR where it applies, all of which mandate the protection of data and the prevention of unauthorized access.

Organizations should also regularly review and update their tokenization processes to ensure continued compliance with evolving regulations. This involves staying informed about changes in data protection laws and implementing necessary updates to tokenization systems. By maintaining a proactive approach to compliance, businesses can avoid potential legal issues and protect their reputations in the marketplace.

Leveraging AI and Blockchain for Enhanced Security

Artificial Intelligence (AI) and blockchain technology can play a significant role in enhancing the effectiveness of tokenization in IoT networks. AI-driven analytics can help monitor and analyze tokenization processes, identifying potential vulnerabilities and optimizing security protocols. Machine learning algorithms can also help detect and respond to emerging threats, keeping tokenization systems robust and effective.

In cities like Riyadh and Dubai, where cutting-edge technology is a cornerstone of smart city initiatives, integrating AI into tokenization systems can provide significant security benefits. AI-powered tools can automate the monitoring of tokenization processes, reducing the risk of human error and ensuring that sensitive data remains protected at all times.

Blockchain technology offers an additional layer of security by providing a decentralized and immutable ledger for recording tokenization events. This makes the record of tokenization events transparent, tamper-evident, and verifiable. By integrating blockchain with tokenization, organizations can create a secure and auditable record of data protection efforts, further enhancing the security of their IoT networks.
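
The tamper-evidence property can be illustrated with a simple hash chain. This is not a blockchain: there is no decentralization or consensus, and the `AuditChain` class and event fields are assumptions made for the example. It only shows the underlying idea that each record commits to the one before it, so a later change to any record is detectable.

```python
import hashlib
import json


def _hash_entry(event: dict, prev_hash: str) -> str:
    # Hash the event together with the previous hash to link the entries.
    body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class AuditChain:
    """Hypothetical append-only log of tokenization events."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        self.entries.append(
            {"event": event, "prev_hash": prev_hash, "hash": _hash_entry(event, prev_hash)}
        )

    def verify(self) -> bool:
        # Recompute every hash; any edited entry breaks the chain from there on.
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _hash_entry(entry["event"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


chain = AuditChain()
chain.append({"action": "tokenize", "client": "sensor-01", "token": "tok_ab12"})
chain.append({"action": "detokenize", "client": "billing-backend", "token": "tok_ab12"})
```

Note that the log records tokens and client names only, so the audit trail itself does not become a new store of sensitive values.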

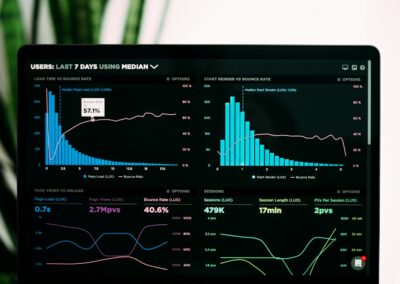

Continuous Monitoring and Improvement

Implementing tokenization is not a one-time task but an ongoing process that requires continuous monitoring and improvement. Organizations must regularly assess the effectiveness of their tokenization systems, making adjustments as needed to address new security challenges. This involves monitoring tokenization logs, analyzing security incidents, and staying informed about emerging threats.
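
As one example of what monitoring tokenization logs could involve, the sketch below counts detokenization requests per client and flags any client above a threshold. The log format, the client names, and the threshold value are hypothetical; a real deployment would tune these against its own traffic.

```python
from collections import Counter


def flag_heavy_detokenizers(log_entries, max_requests):
    """Return clients whose detokenize request count exceeds max_requests."""
    counts = Counter(
        entry["client"] for entry in log_entries if entry["action"] == "detokenize"
    )
    return sorted(client for client, n in counts.items() if n > max_requests)


# Hypothetical log entries for one monitoring window.
log_entries = (
    [{"client": "billing-backend", "action": "detokenize"}] * 3
    + [{"client": "sensor-01", "action": "tokenize"}] * 50
    + [{"client": "unknown-host", "action": "detokenize"}] * 40
)
flagged = flag_heavy_detokenizers(log_entries, max_requests=10)
```

Detokenization is the operation worth watching most closely, since it is the only path back to the original data.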

In cities like Riyadh and Dubai, where the threat landscape is constantly evolving, continuous monitoring is essential for maintaining the security of IoT networks. By adopting a proactive approach to tokenization, organizations can identify and address potential vulnerabilities before they are exploited, keeping sensitive information protected as conditions change.

Additionally, organizations should regularly update their tokenization systems to incorporate the latest security features and technologies. As new threats emerge, it is crucial to stay ahead of potential attackers by continuously enhancing the security of IoT devices. By committing to continuous improvement, businesses in Saudi Arabia and the UAE can ensure that their IoT networks remain secure and resilient in the face of evolving cyber threats.

Conclusion

Using tokenization in IoT data processing is essential for protecting sensitive information and ensuring the security of connected systems. By leveraging best practices such as centralized tokenization servers, early data tokenization, and compliance with regulatory requirements, businesses in Saudi Arabia and the UAE, including those in Riyadh and Dubai, can strengthen the security of their IoT networks. Embracing AI and blockchain technologies, along with a commitment to continuous monitoring and improvement, will further enhance these efforts, driving long-term business success in an increasingly connected world.
