Utilizing Pruning Techniques to Prevent Overfitting in Decision Trees

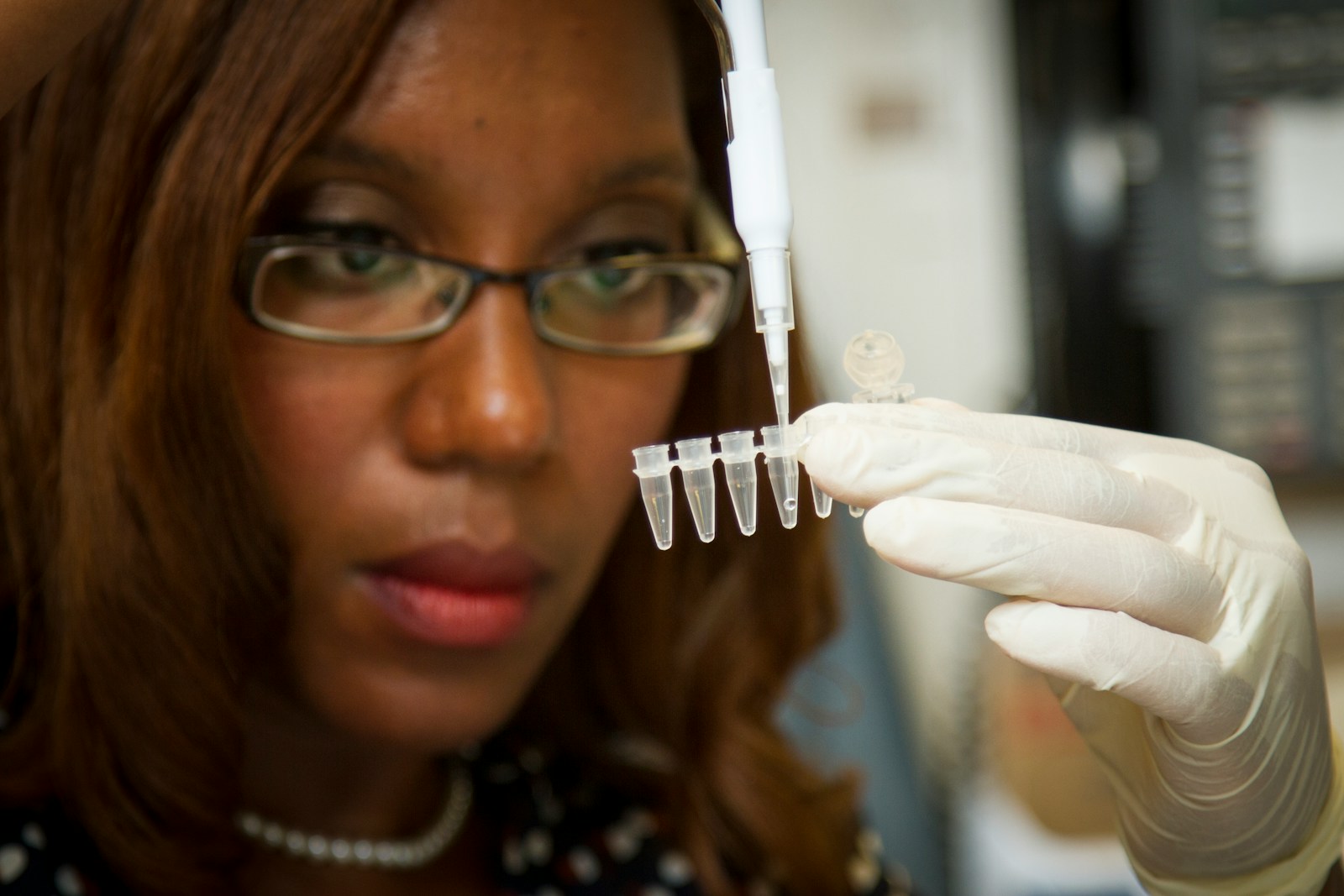

The Power of Decision Trees in Delivering Interpretable Machine Learning Models

Decision trees stand out as one of the most effective tools for building interpretable machine learning models, offering a clear and understandable approach to predictive modeling. Unlike many complex algorithms, decision trees provide a visual representation of decision paths, making it easier for business executives and managers to grasp the rationale behind predictions. This interpretability is crucial in sectors like finance, healthcare, and retail, where understanding the “why” behind a model’s decision is just as important as the accuracy of the decision itself.

For leaders in Riyadh and Dubai, the ability to interpret machine learning models can enhance trust and adoption of AI-driven solutions. Decision trees break down the decision-making process into a series of simple tests on input features (binary yes/no splits in common implementations such as CART), each representing a step in the reasoning process. This step-by-step approach allows business professionals to see how specific inputs influence outcomes, enabling them to make informed decisions based on the model’s predictions. In industries where regulatory compliance and transparency are critical, such as financial services, the clarity provided by decision trees can be particularly beneficial. This transparency not only fosters trust in the model’s outputs but also facilitates communication between data scientists and business stakeholders.
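To make the idea of readable decision paths concrete, here is a minimal sketch. It assumes scikit-learn (the article does not name a library) and uses its bundled iris dataset as a stand-in for business data. `export_text` prints every root-to-leaf path as nested threshold tests, which is the kind of rule set a manager can read without any knowledge of the training algorithm.

```python
# Minimal sketch (assumes scikit-learn): fit a shallow tree and print its
# decision paths as human-readable if/else rules.
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
X, y = iris.data, iris.target

# max_depth=2 is an illustrative choice that keeps the rule printout short.
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(X, y)

# Each indented line is one binary test; lines ending in "class:" are leaves.
rules = export_text(clf, feature_names=list(iris.feature_names))
print(rules)
```

Scikit-learn’s trees follow the CART approach, so every internal node is a single binary threshold test on one feature.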

Moreover, decision trees are inherently flexible and can be applied to a wide range of business problems, from customer segmentation to risk assessment. Their adaptability makes them a valuable asset in the dynamic markets of Saudi Arabia and the UAE, where businesses must quickly respond to changing conditions. By leveraging decision trees, companies can create models that not only provide accurate predictions but also offer insights that are easy to interpret and act upon. This capability is essential for maintaining a competitive edge in a fast-paced, technology-driven world.

Pruning Techniques for Preventing Overfitting in Decision Trees

While decision trees are powerful tools for creating interpretable machine learning models, they are also prone to overfitting, a scenario where the model becomes too complex and starts capturing noise in the data rather than the underlying patterns. Left unconstrained, a tree can keep splitting until each leaf covers only a handful of training examples, effectively memorizing them. Overfitting can lead to poor generalization to new, unseen data, which is a significant concern for businesses that rely on predictive models for strategic decision-making. To mitigate this risk, pruning techniques are employed to simplify the decision tree and improve its generalization capabilities.
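The gap between training and test performance is the usual symptom of overfitting. The sketch below assumes scikit-learn and builds synthetic data with `make_classification`, where `flip_y=0.2` assigns random labels to about 20% of samples to act as noise. A tree grown with no limits fits the training set perfectly, yet scores noticeably worse on held-out data.

```python
# Minimal sketch (assumes scikit-learn): an unconstrained tree memorises noisy
# training labels, so training accuracy overstates real-world performance.
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, illustrative data; flip_y adds label noise.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=4,
                           flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

# No growth limits: splitting continues until every leaf is pure.
full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

train_acc = full_tree.score(X_train, y_train)
test_acc = full_tree.score(X_test, y_test)
print(f"depth={full_tree.get_depth()} leaves={full_tree.get_n_leaves()}")
print(f"train accuracy={train_acc:.3f}  test accuracy={test_acc:.3f}")
```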

One of the most common pruning techniques is post-pruning, where the tree is fully grown and then pruned back by removing branches that provide little to no value in predicting the target variable. This process involves evaluating the impact of each branch on the model’s accuracy, typically on data that was not used to grow the tree, and removing those that do not contribute meaningfully. For business leaders in Riyadh and Dubai, post-pruning helps keep the decision tree interpretable while reducing the likelihood of overfitting. This balance between simplicity and accuracy is crucial in high-stakes environments where decisions based on overfitted models could lead to significant financial losses or missed opportunities.
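As one concrete form of post-pruning, the sketch below assumes scikit-learn, which implements minimal cost-complexity pruning through the `ccp_alpha` parameter. This variant ranks subtrees by impurity reduction per leaf rather than by accuracy directly; accuracy enters only when a `ccp_alpha` value is picked on a held-out validation split. The data is synthetic and illustrative.

```python
# Minimal sketch (assumes scikit-learn): grow a full tree, compute its
# cost-complexity pruning path, then refit with the alpha that validates best.
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, illustrative data with about 20% randomly assigned labels.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=4,
                           flip_y=0.2, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0)

# Effective alphas at which successive subtrees would be pruned away.
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(
    X_train, y_train)
# Drop the last alpha (it prunes all the way to the root) and clamp any tiny
# negative round-off to zero, since ccp_alpha must be non-negative.
alphas = [max(float(a), 0.0) for a in path.ccp_alphas[:-1]]

# Refit once per alpha and keep the tree that scores best on validation data.
best_alpha, best_score = 0.0, -1.0
for alpha in alphas:
    tree = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha)
    score = tree.fit(X_train, y_train).score(X_val, y_val)
    if score > best_score:
        best_alpha, best_score = alpha, score

full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
pruned = DecisionTreeClassifier(random_state=0, ccp_alpha=best_alpha).fit(
    X_train, y_train)
print(f"full tree: {full.get_n_leaves()} leaves")
print(f"pruned tree: {pruned.get_n_leaves()} leaves, ccp_alpha={best_alpha:.4f}, "
      f"validation accuracy={best_score:.3f}")
```

Because the same validation split is used both to choose `ccp_alpha` and to report its score, that score is somewhat optimistic; a separate test set or cross-validation gives a fairer estimate.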

Another effective pruning technique is pre-pruning, which involves setting constraints during the tree-building process to prevent the tree from growing too large. Parameters such as maximum depth, minimum samples per leaf, and minimum samples per split can be adjusted to control the complexity of the tree. By limiting the growth of the decision tree, pre-pruning helps prevent overfitting from the outset. This approach is particularly useful in industries where computational efficiency is critical, as it reduces the time and resources required to train the model. For businesses in fast-paced markets like Saudi Arabia and the UAE, pre-pruning offers a way to maintain both model accuracy and operational efficiency.
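The three pre-pruning parameters named above map directly onto constructor arguments in scikit-learn (assumed here as the library). The sketch compares an unconstrained tree with a pre-pruned one on synthetic data. The specific limits (depth 4, 20 samples per leaf, 40 per split) are illustrative, not recommended defaults.

```python
# Minimal sketch (assumes scikit-learn): pre-pruning sets growth limits before
# fitting, so the tree never grows past them.
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, illustrative data with label noise.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=4,
                           flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

pre_pruned = DecisionTreeClassifier(
    max_depth=4,           # maximum depth: no path has more than 4 tests
    min_samples_leaf=20,   # minimum samples per leaf
    min_samples_split=40,  # minimum samples a node needs before it may split
    random_state=0,
).fit(X_train, y_train)

full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

for name, model in [("full", full), ("pre-pruned", pre_pruned)]:
    print(f"{name}: depth={model.get_depth()} leaves={model.get_n_leaves()} "
          f"test accuracy={model.score(X_test, y_test):.3f}")
```

In practice these limits are themselves hyperparameters, usually chosen by validation rather than fixed by hand.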

Additionally, cross-validation is often used in conjunction with pruning techniques to further enhance the robustness of decision trees. By splitting the dataset into multiple subsets (folds), training on all but one fold and validating on the held-out fold in turn, cross-validation provides a more reliable estimate of the model’s performance on unseen data than a single train/test split. This process helps identify the optimal level of pruning, ensuring that the decision tree generalizes well to new data while remaining interpretable. For companies looking to deploy AI solutions in complex and dynamic environments, cross-validation combined with pruning offers a reliable method for building robust and trustworthy models.
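One way to combine the two ideas, sketched here under the assumption of scikit-learn, is to treat the pruning strength `ccp_alpha` as a hyperparameter and let `GridSearchCV` pick it by 5-fold cross-validation. The candidate values come from the full tree’s cost-complexity pruning path, and a final held-out test set is kept untouched for an honest last check. The data is synthetic and illustrative.

```python
# Minimal sketch (assumes scikit-learn): choose the pruning level by 5-fold
# cross-validation, then check the chosen tree once on held-out test data.
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, illustrative data with label noise.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=4,
                           flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

# Candidate pruning strengths from the full tree's cost-complexity path,
# excluding the root-only alpha and clamping any negative round-off to zero.
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(
    X_train, y_train)
param_grid = {"ccp_alpha": [max(float(a), 0.0) for a in path.ccp_alphas[:-1]]}

# Each candidate is trained on 4 folds and scored on the 5th, rotating through
# all 5 folds; the best mean score wins and is refit on all training data.
search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
search.fit(X_train, y_train)

best = search.best_estimator_
print(f"best ccp_alpha={search.best_params_['ccp_alpha']:.4f}")
print(f"mean CV accuracy={search.best_score_:.3f}")
print(f"test accuracy={best.score(X_test, y_test):.3f}, leaves={best.get_n_leaves()}")
```

The same pattern works for pre-pruning: replace the `ccp_alpha` grid with candidate values for `max_depth` or `min_samples_leaf`.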
