Optimizing AI Performance Through Strategic Hyperparameter Tuning

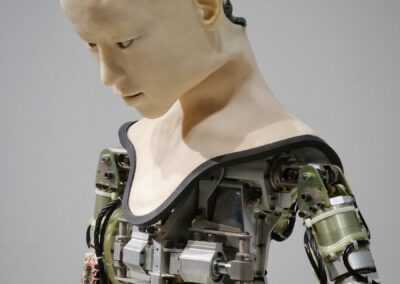

Understanding the Importance of Hyperparameter Tuning in Deep Neural Networks

In the rapidly evolving field of artificial intelligence (AI), the role of hyperparameter tuning in deep neural networks has become a crucial factor for businesses aiming to maximize the performance of their AI models. Hyperparameters, which are the settings or configurations used to control the learning process of a model, play a pivotal role in determining the efficiency and effectiveness of deep neural networks. For businesses in regions like Saudi Arabia and the UAE, where the adoption of AI is accelerating across various sectors, understanding and implementing hyperparameter tuning is essential for achieving optimal results in AI applications.

Hyperparameter tuning involves selecting the set of hyperparameters that gives a neural network its best performance on data it has not been trained on. Unlike model parameters such as weights and biases, which are learned during training, hyperparameters are set before training begins and must be carefully chosen to ensure that the model learns effectively. These hyperparameters include the learning rate, the batch size, the number of layers, and the choice of activation function, among others. The challenge lies in the fact that deep neural networks are highly sensitive to these settings; even slight adjustments can significantly impact the model’s accuracy and generalization capabilities. For businesses in fast-paced markets like Riyadh and Dubai, where rapid innovation and accurate decision-making are vital, mastering hyperparameter tuning can lead to a competitive advantage.
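To make the distinction between parameters and hyperparameters concrete, here is a minimal sketch in plain Python. It is not a deep neural network: it assumes a one-weight model `y = w * x` trained by gradient descent on toy data, and the function name `train` and the three candidate learning rates are illustrative choices, not values taken from any real project.

```python
def train(learning_rate, epochs, data):
    """Fit y = w * x with gradient descent and return (w, mean squared error)."""
    w = 0.0  # model parameter: starts at an arbitrary value and is learned
    n = len(data)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / n
        w -= learning_rate * grad
    mse = sum((w * x - y) ** 2 for x, y in data) / n
    return w, mse


# Toy data generated from y = 3x, so the ideal learned weight is 3.
data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]

# Hyperparameters: chosen before training and never updated by it.
results = {}
for learning_rate in (0.001, 0.05, 0.2):
    results[learning_rate] = train(learning_rate, epochs=50, data=data)
    w, mse = results[learning_rate]
    print(f"learning_rate={learning_rate}: w={w:.4g}, mse={mse:.4g}")
```

On this toy problem the smallest learning rate is still well short of 3 after 50 epochs, the middle one converges, and the largest one diverges, which is the kind of sensitivity the paragraph above describes.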

Moreover, the role of hyperparameter tuning in deep neural networks extends beyond merely improving performance metrics. It also plays a critical role in preventing issues such as overfitting and underfitting, which can compromise the reliability of AI models. Overfitting occurs when a model becomes too specialized in the training data, performing well on this data but poorly on new, unseen data. On the other hand, underfitting happens when a model is too simplistic and fails to capture the underlying patterns in the data. By carefully tuning hyperparameters, businesses can strike the right balance between complexity and generalization, ensuring that their AI models are robust and reliable across various applications.
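The trade-off between overfitting and underfitting can be seen by comparing error on training data with error on held-out validation data. The sketch below swaps the neural network for a much simpler model, k-nearest-neighbours regression, because it runs in plain Python; the single hyperparameter `k`, the noisy sine data, and all names are assumptions made for illustration.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the toy data is reproducible
N = 60
step = 2 * math.pi / N


def noisy_sine(x):
    # Underlying pattern (sine) plus random noise the model should not memorise.
    return math.sin(x) + rng.gauss(0.0, 0.3)


# Training and validation points are interleaved so that they never coincide.
train_set = [(i * step, noisy_sine(i * step)) for i in range(N)]
valid_set = [((i + 0.5) * step, noisy_sine((i + 0.5) * step)) for i in range(N)]


def knn_predict(x, k):
    """Predict y as the average of the k training points closest to x."""
    nearest = sorted(train_set, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(dataset, k):
    return sum((knn_predict(x, k) - y) ** 2 for x, y in dataset) / len(dataset)


# k is the hyperparameter: small k = flexible model, large k = rigid model.
errors = {k: (mse(train_set, k), mse(valid_set, k)) for k in (1, 5, N)}
for k, (train_err, valid_err) in errors.items():
    print(f"k={k:2d}: train MSE={train_err:.3f}, validation MSE={valid_err:.3f}")
```

With `k=1` the model reproduces the training data exactly, noise included, so its training error is zero while its validation error is not (overfitting). With `k=N` it predicts the same average everywhere and misses the pattern entirely (underfitting). Tuning aims for a value between those extremes, judged on the validation column rather than the training column.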

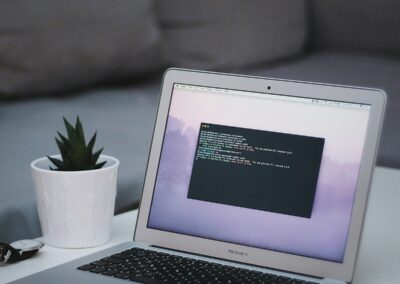

Implementing Hyperparameter Tuning for Business Success

To fully leverage the role of hyperparameter tuning in deep neural networks, businesses must adopt a strategic approach that aligns with their specific goals and resources. One of the most straightforward methods for hyperparameter tuning is grid search, which evaluates every combination of a predefined set of candidate values for each hyperparameter and keeps the configuration that performs best on validation data. Because the number of combinations multiplies with each hyperparameter added, this approach can be time-consuming, but it is exhaustive and easy to reproduce, which makes it particularly valuable for businesses that require high-precision models, such as those in healthcare, finance, or manufacturing. In regions like Saudi Arabia and the UAE, where industries are increasingly reliant on AI-driven insights, grid search can help companies develop models that deliver accurate and actionable results.
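A grid search can be sketched in a few lines. In the example below, `validation_loss` is a hypothetical stand-in for "train the network with this configuration and measure its loss on validation data"; its formula, the hyperparameter names, and the candidate values are all assumptions chosen so the script runs instantly.

```python
import itertools
import math


def validation_loss(learning_rate, batch_size, num_layers):
    """Hypothetical stand-in for a real training run (lower is better)."""
    return ((math.log10(learning_rate) + 2) ** 2
            + ((batch_size - 32) / 32) ** 2
            + 0.1 * (num_layers - 3) ** 2)


# The grid: a fixed list of candidate values for each hyperparameter.
grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [16, 32, 64],
    "num_layers": [2, 3, 4],
}

names = list(grid)
trials = []
# itertools.product enumerates every combination: 3 * 3 * 3 = 27 runs.
for values in itertools.product(*(grid[name] for name in names)):
    config = dict(zip(names, values))
    trials.append((validation_loss(**config), config))

best_loss, best_config = min(trials, key=lambda t: t[0])
print(f"evaluated {len(trials)} configurations")
print(f"best configuration: {best_config} (loss {best_loss:.4f})")
```

Adding a fourth hyperparameter with three candidate values would triple the number of training runs to 81, which is why grid search becomes expensive as the search space grows.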

Another important technique is random search, which evaluates a fixed number of hyperparameter combinations drawn at random from specified ranges. This method can be more efficient than grid search, especially when the search space is large and only a few of the hyperparameters strongly affect performance, because every trial tests a new value of every hyperparameter. Random search is particularly useful in scenarios where businesses need to deploy AI models quickly and do not have the luxury of extensive computational resources. For companies in Riyadh and Dubai, where time-to-market can be a critical factor, random search offers a practical way to optimize hyperparameters within a fixed computational budget. By incorporating random search into their AI development processes, businesses can accelerate the deployment of high-performing models and maintain their competitive edge.
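Random search differs from grid search mainly in how configurations are generated. The sketch below again uses a hypothetical `validation_loss` in place of a real training run; the sampling ranges, the budget of 20 trials, and the seed are illustrative assumptions.

```python
import math
import random


def validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for a real training run (lower is better)."""
    return (math.log10(learning_rate) + 2.5) ** 2 + ((batch_size - 32) / 32) ** 2


def sample_config(rng):
    return {
        # Sample the exponent uniformly so every order of magnitude
        # between 1e-5 and 1e-1 is equally likely.
        "learning_rate": 10 ** rng.uniform(-5, -1),
        "batch_size": rng.choice([16, 32, 64, 128]),
    }


rng = random.Random(42)  # fixed seed so the search is reproducible
budget = 20              # number of training runs we can afford

trials = []
for _ in range(budget):
    config = sample_config(rng)
    trials.append((validation_loss(**config), config))

best_loss, best_config = min(trials, key=lambda t: t[0])
print(f"best of {budget} random trials: {best_config} (loss {best_loss:.4f})")
```

The budget is set directly rather than being dictated by the size of a grid, and each of the 20 trials tries a different learning rate instead of revisiting the same few values.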

Automated hyperparameter tuning methods, such as Bayesian optimization, are also gaining popularity in the AI community. These methods fit a probabilistic model to the results of the configurations tried so far and use its predictions, together with its uncertainty, to choose which configuration to evaluate next, allowing businesses to explore the search space with fewer training runs than an exhaustive search would need. For executives and entrepreneurs in the Middle East, where AI is a key driver of business success, adopting automated hyperparameter tuning can lead to significant improvements in model performance while reducing the time and effort required for manual tuning. This approach not only enhances the efficiency of AI projects but also frees up valuable resources that can be allocated to other strategic initiatives.
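The loop behind Bayesian optimization can be sketched without any external library, although real projects would normally rely on a dedicated tuning package. The example below is a simplified illustration under several assumptions: a single hyperparameter (the base-10 logarithm of the learning rate), a hypothetical `validation_loss` standing in for a training run, a Gaussian-process surrogate with a fixed RBF kernel, and a lower-confidence-bound rule for picking the next trial.

```python
import math


def validation_loss(log_lr):
    """Hypothetical stand-in for an expensive training run (lower is better)."""
    return (log_lr + 2.5) ** 2


def rbf(a, b, length_scale=0.5):
    # Similarity between two hyperparameter values; 1.0 when they coincide.
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def posterior(xs, ys, x_new, noise=1e-4):
    """Gaussian-process posterior mean and standard deviation at x_new."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j]) + (noise if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    k_star = [rbf(x, x_new) for x in xs]
    y_mean = sum(ys) / n
    alpha = solve(K, [y - y_mean for y in ys])
    v = solve(K, k_star)
    mean = y_mean + sum(k * a for k, a in zip(k_star, alpha))
    var = max(1.0 - sum(k * vi for k, vi in zip(k_star, v)), 0.0)
    return mean, math.sqrt(var)


def lower_confidence_bound(xs, ys, c, kappa=2.0):
    # Low predicted loss OR high uncertainty both make a candidate attractive.
    mean, std = posterior(xs, ys, c)
    return mean - kappa * std


# Candidate values of log10(learning rate) between -5 and -1.
candidates = [-5 + 0.04 * i for i in range(101)]

# Start from three spread-out trials, then let the surrogate pick the rest.
xs = [candidates[0], candidates[50], candidates[100]]
ys = [validation_loss(x) for x in xs]

for _ in range(10):
    untried = [c for c in candidates if c not in xs]
    x_next = min(untried, key=lambda c: lower_confidence_bound(xs, ys, c))
    xs.append(x_next)
    ys.append(validation_loss(x_next))

best_loss, best_x = min(zip(ys, xs))
print(f"{len(xs)} trials, best log10(lr)={best_x:.2f}, loss={best_loss:.4f}")
```

Each new trial is chosen using everything learned from the earlier ones, which is what distinguishes this approach from grid and random search, where trials are independent of one another. The sketch refits the surrogate for every candidate, which is wasteful but keeps the code short.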

In conclusion, the role of hyperparameter tuning in deep neural networks is a critical factor in the successful training and deployment of AI models. For business leaders in Saudi Arabia, the UAE, and beyond, understanding and implementing effective hyperparameter tuning techniques is essential for achieving optimal AI performance. By adopting methods such as grid search, random search, and automated tuning, businesses can develop models that are not only accurate but also robust and reliable. As AI continues to shape the future of business, the ability to strategically tune hyperparameters will be a key determinant of success in an increasingly competitive and data-driven world.
